Kubernetes (also known as k8s) is an open-source container orchestration system. It allows users to deploy, scale, and manage containerized applications with minimal downtime. In this tutorial, you will learn how to deploy a PHP application on a Kubernetes cluster.

When you run a PHP application, Nginx acts as a proxy that forwards requests to PHP-FPM. Running both services in a single container is cumbersome. Kubernetes lets you run them in two separate containers, which makes them easier to manage. It also lets you reuse the containers instead of building your own container image for every new version of PHP or Nginx.

You will run your application and the proxy service in two separate containers. You will also learn how to use local storage to create a Persistent Volume (PV) and a Persistent Volume Claim (PVC), and then use this PVC to keep your configuration files and code outside of the container images. After completing this tutorial, you will be able to reuse your Nginx image for other applications that require a proxy server, simply by passing it a different configuration instead of rebuilding the image.

Prerequisites

- A basic understanding of Kubernetes (k8s) and its objects. Refer to this guide for a detailed overview of the Kubernetes ecosystem.

- A Kubernetes cluster that is up and running on Ubuntu 18.04. Follow this tutorial to create your Kubernetes cluster using kubeadm.

- In addition, you need to host your application code on a public URL, for example, GitHub.

Step 1: Create PHP-FPM and Nginx Services

In this step, you will create the PHP-FPM and Nginx services. A Service provides access to a set of pods within a cluster. Pods in a cluster can reach a Service by its name, without needing to know any IP addresses. The PHP-FPM service and the Nginx service will provide access to the PHP-FPM and Nginx pods, respectively.

Because Nginx acts as a proxy for the PHP-FPM pods, it needs to know how to find them. For this, you will take advantage of Kubernetes' automatic service discovery and use a human-readable service name to route requests to the respective service.
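The short name works because Kubernetes DNS gives every Service a name of the form `<service>.<namespace>.svc.<cluster-domain>` and, under the default ClusterFirst DNS policy, writes a search list into each pod's /etc/resolv.conf. The Python sketch below (the helper names are hypothetical) lists the names a resolver would try for `php`, assuming the `default` namespace and the default `cluster.local` domain; your cluster may be configured with a different domain.

```python
from __future__ import annotations


def search_domains(namespace: str, cluster_domain: str = "cluster.local") -> list[str]:
    """Search list a pod in `namespace` typically receives under the ClusterFirst DNS policy."""
    return [
        f"{namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]


def candidate_fqdns(service: str, namespace: str = "default",
                    cluster_domain: str = "cluster.local") -> list[str]:
    """Fully qualified names tried, in order, when a pod looks up a short service name."""
    return [f"{service}.{domain}" for domain in search_domains(namespace, cluster_domain)]


if __name__ == "__main__":
    # Nginx only knows the PHP-FPM service as "php"; the first candidate is its Service DNS name.
    for name in candidate_fqdns("php"):
        print(name)
```

This is why `fastcgi_pass php:9000;` in the Nginx configuration later in this tutorial can use the bare service name.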

To create any service, you need a YAML file that contains the object definition. Such a file usually contains at least the following fields:

- apiVersion: The Kubernetes API version to which the definition belongs.

- kind: The kind of Kubernetes object this YAML file creates, for instance a Service, a Job, or a Pod.

- metadata: The name of the object and any labels that you want to apply to it.

- spec: The specification of your object, such as environment variables, the container image to use, and the ports on which the container service will be accessible. Some objects, such as the StorageClass and ConfigMap you will create later, use other top-level fields instead of spec.

Creating the PHP-FPM service

To start with, create a directory to hold your Kubernetes object definitions. Log in to your master node and create a directory named definitions:

```bash
mkdir definitions
```

Change into the definitions directory:

```bash
cd definitions
```

Next, create your PHP-FPM service file, php_fpm_service.yaml:

```bash
nano php_fpm_service.yaml
```

Then set the apiVersion and kind in the php_fpm_service.yaml file:

```yaml
apiVersion: v1
kind: Service
```

Name your service php, as it will provide access to your PHP-FPM application:

```yaml
...
metadata:
  name: php
```

Label your php service with tier: backend, because the PHP application will run behind this service:

```yaml
...
  labels:
    tier: backend
```

A Service uses selector labels to determine which pods it routes traffic to. Any pod that matches these labels is selected, regardless of when the pod was created. You will learn how to add labels to your pods later in this tutorial.
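To make the matching rule concrete, here is a small Python sketch (a hypothetical helper, not a Kubernetes API) of the equality-based selection a Service performs: a pod is selected when every key/value pair in the selector appears among its labels, and any extra labels on the pod are ignored.

```python
from __future__ import annotations


def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key/value in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Labels that the php Deployment in this tutorial puts on its pods.
php_pod_labels = {"app": "php", "tier": "backend"}

# The php Service selector matches these pods.
assert selector_matches({"app": "php", "tier": "backend"}, php_pod_labels)

# A selector using app: php-fpm would not match, leaving the Service without endpoints.
assert not selector_matches({"app": "php-fpm", "tier": "backend"}, php_pod_labels)

# Extra pod labels are fine; the selector only needs to be a subset.
assert selector_matches({"tier": "backend"}, {**php_pod_labels, "version": "7"})
```

The second assertion is the practical takeaway: the Service selector and the pod labels must use exactly the same values.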

Add the tier: backend label, which places the pods in the backend tier, along with the app: php label, which indicates that the pods run the PHP-FPM application. Add these selector labels under spec, after the metadata section:

```yaml
...
spec:
  selector:
    app: php
    tier: backend
```

Next, declare the port on which this php service can be accessed, also under spec. You can choose any port, but this tutorial uses port 9000, the port PHP-FPM listens on by default. Because no targetPort is set, Kubernetes forwards traffic to the same port on the pods:

```yaml
...
  ports:
  - protocol: TCP
    port: 9000
```

Once done with the above steps, your php_fpm_service.yaml file will look like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: php
  labels:
    tier: backend
spec:
  selector:
    app: php
    tier: backend
  ports:
  - protocol: TCP
    port: 9000
```

Press CTRL+O to save the file, then press CTRL+X to exit nano.

Applying the kubectl command to create the PHP service

Now that the object definition for your service is ready, run the kubectl apply command with the -f argument, specifying your php_fpm_service.yaml file:

```bash
kubectl apply -f php_fpm_service.yaml
```

The output of the above command should be:

```
service/php created
```

Run the following command to verify that your php service is running:

```bash
kubectl get svc
```

You should see the php service listed:

```
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP    10m
php          ClusterIP   10.100.59.238   <none>        9000/TCP   5m
```

Creating the Nginx service

Now that your PHP-FPM service is ready, it is time to create your Nginx service. Create and open a new YAML file for this service, called nginx_service.yaml, in the editor:

```bash
nano nginx_service.yaml
```

Name this service nginx, as it will target the Nginx pods. This service also belongs to the backend, so add a tier: backend label to it:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    tier: backend
```

As with the php service, add the selector labels app: nginx and tier: backend to target the pods. Add the default HTTP port 80 to access this service:

```yaml
...
spec:
  selector:
    app: nginx
    tier: backend
  ports:
  - protocol: TCP
    port: 80
```

To make the Nginx service reachable from the internet, add your worker node's public IP address under spec.externalIPs, replacing your_public_ip with that address. Kubernetes does not manage this address; it only routes traffic that reaches a cluster node on that IP and port to the service's pods:

```yaml
...
spec:
  externalIPs:
  - your_public_ip
```

Your nginx_service.yaml file should look like the one below once you complete all of the above steps:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    tier: backend
spec:
  selector:
    app: nginx
    tier: backend
  ports:
  - protocol: TCP
    port: 80
  externalIPs:
  - your_public_ip
```

Save and close the file after adding all the required parameters above.

Applying the kubectl command to create the Nginx service

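Create the Nginx service by running kubectl apply:

```bash
kubectl apply -f nginx_service.yaml
```

The output should be:

```
service/nginx created
```

Verify that the service is running:

```bash
kubectl get svc
```

You should now see both the nginx and php services:

```
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP      PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1       <none>           443/TCP    13m
nginx        ClusterIP   10.102.160.47   your_public_ip   80/TCP     50s
php          ClusterIP   10.100.59.238   <none>           9000/TCP   8m
```

If you ever need to delete a service, run the following command, replacing service_name with the name of the service:

```bash
kubectl delete svc/service_name
```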

Step 2: Create Local Storage and Persistent Volume

Kubernetes provides various storage plug-ins that help you create storage for your environment. This step shows you how to create a local StorageClass and then use it to create a Persistent Volume.

Creating local storage

Create a file, for example storageClass.yaml, in your editor:

```bash
nano storageClass.yaml
```

Set kind to StorageClass and apiVersion to storage.k8s.io/v1:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
```

Name this StorageClass my-local-storage and add the provisioner and volumeBindingMode fields. Local volumes cannot be provisioned dynamically, so the provisioner is kubernetes.io/no-provisioner. Setting volumeBindingMode to WaitForFirstConsumer delays binding a claim to a volume until a pod that uses the claim is scheduled, so that the scheduler can take the volume's node into account:

```yaml
...
metadata:
  name: my-local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```

Save and exit the file. Your final storageClass.yaml file should look like this:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: my-local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```

Now, create the StorageClass by running the kubectl create command:

```bash
kubectl create -f storageClass.yaml
```

After running the above command, you should get the following output:

```
storageclass.storage.k8s.io/my-local-storage created
```

Creating local Persistent Volume

After creating the StorageClass, you can create your local Persistent Volume. A Persistent Volume (PV) is a piece of storage of a specified size whose life cycle is independent of any pod. A local Persistent Volume is simply a local disk or directory on one of the cluster's nodes. Pods use it through a Persistent Volume Claim, just as they would any other PV, which keeps your pod definitions simple and portable. You will create this local Persistent Volume using the storage class you just created. Open a file, for example persistentVolume.yaml, in your editor:

```bash
nano persistentVolume.yaml
```

Give this Persistent Volume a name, for example my-local-pv:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-local-pv
```

You can set the storage capacity according to your needs when creating a local Persistent Volume. In this tutorial, we will use 5Gi:

```yaml
...
spec:
  capacity:
    storage: 5Gi
```
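Kubernetes storage sizes use quantity suffixes: Gi is a gibibyte (2^30 bytes), whereas G is a gigabyte (10^9 bytes). The hypothetical helper below sketches the conversion for whole-number values only; the real Kubernetes quantity parser also accepts decimal fractions, exponent notation and milli-units.

```python
import re

# Binary (power-of-two) and decimal (power-of-ten) suffixes used in Kubernetes quantities.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"": 1, "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def to_bytes(quantity: str) -> int:
    """Convert a whole-number storage quantity such as '5Gi' or '1G' to bytes."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", quantity.strip())
    if not match:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = int(match.group(1)), match.group(2)
    if suffix in BINARY:
        return number * BINARY[suffix]
    if suffix in DECIMAL:
        return number * DECIMAL[suffix]
    raise ValueError(f"unknown suffix: {suffix!r}")


if __name__ == "__main__":
    print(to_bytes("5Gi"))  # 5368709120
    print(to_bytes("1G"))   # 1000000000
```

So the 5Gi volume used here is slightly larger than 5 GB (about 5.37 GB).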

Add the accessModes and persistentVolumeReclaimPolicy fields, and set storageClassName to the same name used in storageClass.yaml:

```yaml
...
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: my-local-storage
```

Add local.path, the directory on the node that backs this Persistent Volume:

```yaml
...
  local:
    path: /mnt/disk/vol
```

A local Persistent Volume must also include a nodeAffinity section that tells Kubernetes which node holds the directory. The file below pins the volume to a node whose kubernetes.io/hostname label is worker; replace worker with the hostname label of your own worker node. After adding all the required fields, your persistentVolume.yaml file should look like this:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-local-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: my-local-storage
  local:
    path: /mnt/disk/vol
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - worker
```
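The nodeAffinity block in this file restricts the volume to nodes whose kubernetes.io/hostname label is worker. The sketch below (hypothetical helpers, covering only the In operator) shows how such a rule is evaluated: entries in nodeSelectorTerms are ORed, and the expressions inside one term are ANDed. You can check the labels on your nodes with `kubectl get nodes --show-labels`.

```python
from __future__ import annotations


def expression_matches(expr: dict, node_labels: dict[str, str]) -> bool:
    """Evaluate one matchExpressions entry; only the In operator is modelled here."""
    if expr["operator"] != "In":
        raise NotImplementedError(f"operator {expr['operator']!r} not covered by this sketch")
    return node_labels.get(expr["key"]) in expr["values"]


def node_is_eligible(node_selector_terms: list[dict], node_labels: dict[str, str]) -> bool:
    """nodeSelectorTerms are ORed; the expressions inside a single term are ANDed."""
    return any(
        all(expression_matches(expr, node_labels) for expr in term["matchExpressions"])
        for term in node_selector_terms
    )


# nodeAffinity.required.nodeSelectorTerms from persistentVolume.yaml
pv_terms = [
    {"matchExpressions": [
        {"key": "kubernetes.io/hostname", "operator": "In", "values": ["worker"]},
    ]},
]
```

With these terms, only a node labelled `kubernetes.io/hostname=worker` can host pods that use the volume.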

Preparing Local Volume

Now, prepare the local directory on the worker node referenced in the persistentVolume.yaml file. Run the following commands on that node (in this case, the node named worker), prefixing them with sudo if you are not logged in as root:

```bash
DIRNAME="vol"
mkdir -p /mnt/disk/$DIRNAME
chmod 777 /mnt/disk/$DIRNAME
```

Then run the following command on the master node, where your persistentVolume.yaml file is located:

```bash
kubectl create -f persistentVolume.yaml
```

You should get the following output:

```
persistentvolume/my-local-pv created
```

Since you have successfully created your local storage and Persistent Volume, you can now go ahead and create a Persistent Volume Claim to hold your application code and configuration files.

Step 3: Create the Persistent Volume Claim

Your application code needs to be kept safe while you manage or update your pods. For this, you will use the Persistent Volume created in the previous step, which pods access through a PersistentVolumeClaim, or PVC. Once the PVC is bound to the PV, a pod can mount the claim as a volume at whatever path it needs.

Open a file, for example code_volume.yaml, in your editor:

```bash
nano code_volume.yaml
```

Name your PVC code by adding the following parameters and values to your file:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: code
```

The spec section of a PVC has the following items:

- accessModes: There are various possible values for this field as follows:

- ReadWriteOnce – Mounts the volume for a single node with both read and write permissions.

- ReadOnlyMany – Mounts the volume for many nodes with only read permission.

- ReadWriteMany – Mounts the volume for many nodes with both read and write permissions.

- resources: Defines the required storage space.

Since the local volume is available on only a single node, set the access mode to ReadWriteOnce. In this tutorial you will store only a small amount of application code, so 1Gi of storage is sufficient. If you want to store more data or code, adjust the storage value to suit your needs. Keep in mind that growing a claim later is only possible when its StorageClass sets allowVolumeExpansion and the volume type supports expansion, which statically provisioned local volumes do not, and that shrinking a claim is not supported at all:

```yaml
...
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Now, declare the storage class that Kubernetes will use to allocate the volume. Set storageClassName to my-local-storage, the storage class you created in the previous step:

```yaml
...
  storageClassName: my-local-storage
```
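Kubernetes binds the claim to a Persistent Volume that satisfies it. The following Python sketch is a simplified, hypothetical version of that check (the real controller also considers volume mode, label selectors and node constraints, and the byte-count field names here are made up): the storage class must match, the volume must offer every requested access mode, and its capacity must cover the request. A PV binds to exactly one claim, so the 1Gi claim receives the whole 5Gi volume.

```python
from __future__ import annotations

GI = 2**30  # bytes in one gibibyte ("Gi")


def pv_can_bind(pv: dict, pvc: dict) -> bool:
    """Simplified static-binding check between an Available PV and a pending PVC."""
    same_class = pv["storageClassName"] == pvc["storageClassName"]
    # Every access mode the claim asks for must be offered by the volume.
    modes_ok = set(pvc["accessModes"]) <= set(pv["accessModes"])
    big_enough = pv["capacity_bytes"] >= pvc["request_bytes"]
    return same_class and modes_ok and big_enough


my_local_pv = {"storageClassName": "my-local-storage",
               "accessModes": ["ReadWriteOnce"], "capacity_bytes": 5 * GI}
code_pvc = {"storageClassName": "my-local-storage",
            "accessModes": ["ReadWriteOnce"], "request_bytes": 1 * GI}
```

If any of the three conditions fails, the claim stays Pending until a suitable volume appears.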

After completing the above steps, your code_volume.yaml file should look like this:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: code
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: my-local-storage
```

Now save and exit the file.

Creating PVC

Create the code PVC by running the kubectl apply command:

```bash
kubectl apply -f code_volume.yaml
```

The following output indicates that the object was created successfully and that your 1Gi PVC can now be mounted as a volume:

```
persistentvolumeclaim/code created
```

To check the available Persistent Volumes (PVs), run:

```bash
kubectl get pv
```

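The output will look similar to the sample below. The values in your cluster reflect your own configuration: for this tutorial, expect NAME my-local-pv, CAPACITY 5Gi, RECLAIM POLICY Retain and STORAGECLASS my-local-storage. Because the storage class uses WaitForFirstConsumer, the PV remains Available, and the claim remains Pending, until a pod that uses the code claim is scheduled:

```
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM          STORAGECLASS       REASON   AGE
pvc-ca4df10f-ab8c-11e8-b89d-12331aa95b13   1Gi        RWO            Delete           Bound    default/code   do-block-storage            2m
```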

All the above fields, except for RECLAIM POLICY and STATUS, summarize your configuration file. The reclaim policy defines what happens to the PV once the PVC bound to it is deleted. This tutorial uses Retain, which keeps the PV and its data so that an administrator can reclaim them manually. The Delete policy, shown in the sample output, removes the PV from the Kubernetes cluster as well as the underlying storage from the infrastructure. Refer to the Kubernetes Persistent Volumes documentation for details about reclaim policies and PV status values.
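The lifecycle described here can be pictured as a small state machine. The Python sketch below is a toy model with hypothetical names, not the real PersistentVolume object: a PV moves from Available to Bound, and when its claim is deleted the reclaim policy decides whether it becomes Released (Retain) or disappears (Delete, represented by the placeholder `<removed>`).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PersistentVolume:
    """Toy model of a PV's lifecycle; not the real Kubernetes object."""
    name: str
    reclaim_policy: str              # "Retain" or "Delete"
    status: str = "Available"
    claim: str | None = None

    def bind(self, claim: str) -> None:
        if self.status != "Available":
            raise RuntimeError(f"{self.name} is {self.status}, not Available")
        self.status, self.claim = "Bound", claim

    def claim_deleted(self) -> None:
        """Apply the reclaim policy after the bound PVC is deleted."""
        if self.reclaim_policy == "Retain":
            # The PV and its data stay, but it is not offered to new claims
            # until an administrator cleans it up.
            self.status = "Released"
        else:
            # Delete: the PV object goes away (with its backing storage, where the
            # volume plugin supports it); "<removed>" stands in for "no longer exists".
            self.status = "<removed>"
```

With Retain, a Released volume cannot simply be bound again, which protects the application code on disk from being handed to a different claim by accident.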

Now that your Persistent Volume Claim is in place, you can create your pods using a Deployment.

Step 4: Create Deployment for your PHP-FPM Application

In this step, you will create your PHP-FPM pod using a Deployment. Deployments use ReplicaSets to provide a uniform way to create, update, and manage pods. They also keep a revision history, so if an update does not work as expected, you can roll the pods back to a previous version, for example with kubectl rollout undo.

The spec.selector key in the Deployment lists all the labels of the pods it manages. It also uses the template key to create the pods that are required.

This step also introduces Init Containers. Init Containers run to completion, one after another, before the regular containers specified in the pod template start. Here, the Init Container will download a sample index.php file from a public GitHub repository. The contents of the sample file are:

```php
<?php
echo phpinfo();
```
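The start-up order is what produces the Init:0/1 status you will see later. The Python sketch below is a simplified model with hypothetical names: each init container must succeed before the next one starts, and the regular containers start only after all of them have completed. On failure the sketch simply stops, whereas the kubelet would retry according to the pod's restartPolicy.

```python
from __future__ import annotations

from typing import Callable


def start_pod(init_containers: list[tuple[str, Callable[[], bool]]],
              app_containers: list[str]) -> list[str]:
    """Return the sequence of start-up events for a simplified pod."""
    events: list[str] = []
    for name, run in init_containers:
        events.append(f"init {name} started")
        if not run():
            events.append(f"init {name} failed")
            return events
        events.append(f"init {name} completed")
    events.extend(f"container {name} started" for name in app_containers)
    return events


if __name__ == "__main__":
    # "install" stands in for the wget download; here it succeeds immediately.
    for event in start_pod([("install", lambda: True)], ["php"]):
        print(event)
```

Because the php container only starts after install completes, index.php is already on the volume when PHP-FPM comes up.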

Creating PHP Deployment

Open a new file named php_deployment.yaml in your editor to create your Deployment:

```bash
nano php_deployment.yaml
```

Name the Deployment php, as it will manage your PHP-FPM pods, and add the tier: backend label because the pods belong to the backend tier:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: php
  labels:
    tier: backend
```

Use the replicas field to specify how many copies of this pod should be created. The number of replicas depends on your requirements and the available resources. In this tutorial, you will create only one replica of your pod:

```yaml
...
spec:
  replicas: 1
```

Add the app: php and tier: backend labels under the selector key to indicate that this Deployment manages pods matching these two labels:

```yaml
...
  selector:
    matchLabels:
      app: php
      tier: backend
```

Next, your Deployment spec needs a template that defines the specification used to create your pods. The template's labels must match both the php service's selector and the Deployment's matchLabels, so add app: php and tier: backend under template.metadata.labels:

```yaml
...
  template:
    metadata:
      labels:
        app: php
        tier: backend
```
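The API server rejects a Deployment whose spec.selector does not select the labels of its own pod template. The sketch below is a hypothetical helper that covers only matchLabels (not matchExpressions or the rest of API validation) and illustrates that consistency check on the php Deployment.

```python
from __future__ import annotations


def validate_deployment(deployment: dict) -> list[str]:
    """Return selector/template label mismatches (a small subset of real API validation)."""
    spec = deployment["spec"]
    match_labels = spec["selector"]["matchLabels"]
    template_labels = spec["template"]["metadata"]["labels"]
    errors = []
    for key, value in match_labels.items():
        if template_labels.get(key) != value:
            errors.append(f"selector {key}={value} does not match template labels")
    return errors


php_deployment = {
    "spec": {
        "selector": {"matchLabels": {"app": "php", "tier": "backend"}},
        "template": {"metadata": {"labels": {"app": "php", "tier": "backend"}}},
    }
}
```

An empty list means the selector and template agree; any entry corresponds to a Deployment that kubectl apply would refuse.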

Next, under template.spec, specify the volumes that your containers will access. Name the volume code and point it at the PVC named code, which holds your application code:

```yaml
...
    spec:
      volumes:
      - name: code
        persistentVolumeClaim:
          claimName: code
```

Then specify the container name along with the image that you want to run inside your pod. Many images are available on Docker Hub (https://hub.docker.com/explore/); this tutorial uses the php:7-fpm image:

```yaml
...
      containers:
      - name: php
        image: php:7-fpm
```

Now, mount the volumes the container needs. Since this container runs your PHP code, it needs access to the code volume: add a volumeMounts entry under the php container that mounts code at /code, as shown in the complete file at the end of this step. You will also learn how to copy your application code into the volume using an Init Container.

To download the code, you will use a single Init Container based on the busybox image. BusyBox is a small image that bundles common Unix utilities, including wget, which you will use to fetch the file.

First, add an initContainers section under spec.template.spec and specify the busybox image:

```yaml
...
      initContainers:
      - name: install
        image: busybox
```

Your Init Container needs access to the code volume in order to download the code into it. Mount the code volume at the /code path under spec.template.spec.initContainers:

```yaml
...
        volumeMounts:
        - name: code
          mountPath: /code
```

Each Init Container runs a command. This one uses wget to download the code from GitHub into the /code directory. The -O option sets the name of the downloaded file, in this case index.php.

Add the following lines under the install container in spec.template.spec.initContainers:

```yaml
...
        command:
        - wget
        - "-O"
        - "/code/index.php"
        - https://raw.githubusercontent.com/do-community/php-kubernetes/master/index.php
```
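For readers less familiar with wget, the following Python function is a rough equivalent of what this command does: fetch a URL and write the body to a fixed path, overwriting any existing file. It is only an illustration (the function name is hypothetical); the Init Container itself runs BusyBox wget, not Python.

```python
import shutil
import urllib.request
from pathlib import Path


def fetch_to(url: str, destination: Path) -> int:
    """Download `url` into `destination`, overwriting any existing file.

    Roughly what `wget -O /code/index.php <url>` does in the install Init Container.
    Returns the number of bytes written.
    """
    destination.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as response, open(destination, "wb") as out:
        shutil.copyfileobj(response, out)
    return destination.stat().st_size
```

Called with the GitHub URL above and `Path("/code/index.php")`, it would leave the sample file on the shared volume, just as the Init Container does.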

After you complete all these steps, your php_deployment.yaml file should look like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: php
  labels:
    tier: backend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: php
      tier: backend
  template:
    metadata:
      labels:
        app: php
        tier: backend
    spec:
      volumes:
      - name: code
        persistentVolumeClaim:
          claimName: code
      containers:
      - name: php
        image: php:7-fpm
        volumeMounts:
        - name: code
          mountPath: /code
      initContainers:
      - name: install
        image: busybox
        volumeMounts:
        - name: code
          mountPath: /code
        command:
        - wget
        - "-O"
        - "/code/index.php"
        - https://raw.githubusercontent.com/do-community/php-kubernetes/master/index.php
```

Save the file and exit. Next, create your PHP-FPM Deployment using the kubectl apply command:

```bash
kubectl apply -f php_deployment.yaml
```

Successful creation of the Deployment should give you the following output:

```
deployment.apps/php created
```

Kubernetes then schedules the pod, binds your PersistentVolumeClaim to the Persistent Volume, pulls the specified images and runs your Init Container. Once the Init Container completes, the regular containers start with the volumes mounted at the specified mount points, and your pod is up and running.

You can run the following command to view your Deployment:

```bash
kubectl get deployments
```

You should get output similar to:

```
NAME      DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
php       1         1         1            0           19s
```

This output shows the current state of the Deployment. A Deployment is a controller that maintains a desired state. The DESIRED field shows the number of replicas you asked for, here 1 replica of the php pod. The CURRENT field shows how many replicas currently exist; for a healthy Deployment, it should match DESIRED. Newer versions of kubectl show READY, UP-TO-DATE and AVAILABLE columns instead of DESIRED and CURRENT. You can learn more about the remaining fields in the Kubernetes Deployments documentation.
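The "maintain the desired state" idea can be sketched as one pass of a control loop. The function below is a simplified, hypothetical model of what the ReplicaSet behind a Deployment does: compare the desired replica count with the pods that exist and decide how many to create or which to delete. Real controllers rank pods before choosing which ones to remove.

```python
from __future__ import annotations


def reconcile(desired: int, current_pods: list[str]) -> tuple[int, list[str]]:
    """One pass of a simplified replica control loop.

    Returns (number of pods to create, names of pods to delete) so that the pod
    count converges on `desired`.
    """
    missing = desired - len(current_pods)
    if missing > 0:
        return missing, []
    # Surplus pods are removed; this sketch simply drops the ones at the end of the list.
    return 0, current_pods[desired:]
```

For example, `reconcile(1, [])` asks for one new pod, which is what happens right after you apply php_deployment.yaml; the loop keeps running, so a deleted pod is replaced automatically.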

To check the status of your pod, run:

```bash
kubectl get pods
```

The output of this command varies depending on how much time has passed since you created your Deployment. If you run it shortly after creating the Deployment, the output will be similar to:

```
NAME                   READY     STATUS     RESTARTS   AGE
php-5d8f6bbf7f-56df2   0/1       Init:0/1   0          9s
```

These columns show the following information:

- READY: The number of containers in the pod that are ready, out of the total number of containers.

- STATUS: The status of your pod. Init:0/1 indicates that the Init Containers are running and 0 out of 1 Init Containers have finished.

- RESTARTS: The number of times the pod's containers have restarted.
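The READY and STATUS values follow simple patterns, so they can be read mechanically. The hypothetical helpers below decode the values that appear in this tutorial; any other status (for example CrashLoopBackOff) is better investigated with kubectl describe pods.

```python
from __future__ import annotations

import re


def parse_ready(ready: str) -> tuple[int, int]:
    """Split a READY value such as '0/1' into (ready containers, total containers)."""
    done, total = ready.split("/")
    return int(done), int(total)


def explain_status(status: str) -> str:
    """Describe the STATUS values seen in this tutorial; anything else needs kubectl describe."""
    init = re.fullmatch(r"Init:(\d+)/(\d+)", status)
    if init:
        done, total = (int(n) for n in init.groups())
        return f"{done} of {total} init containers finished"
    known = {
        "PodInitializing": "init containers finished; regular containers are starting",
        "Running": "the pod is bound to a node and its containers have been started",
    }
    return known.get(status, "unknown here; run kubectl describe pods for details")
```

For instance, `explain_status("Init:0/1")` returns "0 of 1 init containers finished".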

Depending on the complexity of your startup scripts, it can take a few minutes for the status to change to PodInitializing:

```
NAME                   READY     STATUS            RESTARTS   AGE
php-5d8f6bbf7f-56df2   0/1       PodInitializing   0          39s
```

This indicates that the Init Containers have finished successfully and the regular containers are starting. Once they start, the status changes to Running:

```
NAME                   READY     STATUS    RESTARTS   AGE
php-5d8f6bbf7f-56df2   1/1       Running   0          4m10s
```

Your pod is now up and running. If your pod does not start, you can use the following commands to debug it:

1. To view detailed information about the pod:

```bash
kubectl describe pods pod-name
```

2. To view the logs of the pod:

```bash
kubectl logs pod-name
```

3. To view the logs of a specific container in the pod:

```bash
kubectl logs pod-name container-name
```

Congratulations! You have successfully mounted the application code, and the PHP-FPM service is ready to handle connections. Next, you will create your Nginx Deployment in a similar way.

Step 5: Create your Nginx Deployment

This step shows you how to configure Nginx using a ConfigMap. A ConfigMap stores configuration as key-value pairs that other Kubernetes objects can reference. With this approach, you can reuse the Nginx image or swap it for a different version whenever required. When you update the ConfigMap, Kubernetes eventually propagates the change to pods that mount it as a volume; however, Nginx reads its configuration only at startup, so you will need to reload Nginx or restart the pods (for example with kubectl rollout restart deployment/nginx, available in kubectl 1.15 and later) for the change to take effect.

To begin, open a file named nginx_configMap.yaml in your editor:

```bash
nano nginx_configMap.yaml
```

Name this ConfigMap nginx-config and label it tier: backend:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  labels:
    tier: backend
```

Next, add the data to the ConfigMap: a key named config whose value is the contents of the Nginx configuration file.

Because Kubernetes resolves service names to the right pods, you can enter the name of your PHP-FPM service, php, in the fastcgi_pass parameter instead of an IP address. Add the following lines to your nginx_configMap.yaml file:

```yaml
...
data:
  config: |
    server {
      index index.php index.html;
      error_log  /var/log/nginx/error.log;
      access_log /var/log/nginx/access.log;
      root /code;
      location / {
        try_files $uri $uri/ /index.php?$query_string;
      }
      location ~ \.php$ {
        try_files $uri =404;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_pass php:9000;
        fastcgi_index index.php;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_param PATH_INFO $fastcgi_path_info;
      }
    }
```
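To see how the two location blocks cooperate, here is a rough Python model of this server block (hypothetical names; real Nginx request processing has many more rules, and the `$uri/` directory check is omitted). It follows try_files in `location /`, the fallback to /index.php, and the values that `location ~ \.php$` hands to php:9000. Because that location only matches URIs ending in .php, fastcgi_split_path_info rarely finds any PATH_INFO with this configuration.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit

ROOT = "/code"                                     # root /code;
PHP_LOCATION = re.compile(r"\.php$")               # location ~ \.php$
SPLIT_PATH_INFO = re.compile(r"^(.+\.php)(/.+)$")  # fastcgi_split_path_info


def route(request_uri: str, files: set[str]) -> dict:
    """Rough model of how the server block handles one request.

    `files` holds the paths that exist under the document root, e.g. {"/index.php"}.
    """
    parts = urlsplit(request_uri)
    uri, query = parts.path, parts.query
    if not PHP_LOCATION.search(uri):
        # location / { try_files $uri $uri/ /index.php?$query_string; }
        if uri in files:
            return {"static": ROOT + uri}
        uri = "/index.php"  # fall back to the front controller, keeping the query string
    # location ~ \.php$ { try_files $uri =404; ... }
    if uri not in files:
        return {"status": 404}
    match = SPLIT_PATH_INFO.match(uri)
    script_name, path_info = match.groups() if match else (uri, "")
    return {
        "fastcgi_pass": "php:9000",
        "SCRIPT_FILENAME": ROOT + script_name,  # $document_root$fastcgi_script_name
        "PATH_INFO": path_info,
        "QUERY_STRING": query,
    }
```

With `files = {"/index.php"}`, a request for /users/42?page=2 ends up at /code/index.php with the query string page=2, which is how PHP front controllers receive "pretty" URLs.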

Once completed, your nginx_configMap.yaml file will look like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  labels:
    tier: backend
data:
  config: |
    server {
      index index.php index.html;
      error_log  /var/log/nginx/error.log;
      access_log /var/log/nginx/access.log;
      root /code;
      location / {
        try_files $uri $uri/ /index.php?$query_string;
      }
      location ~ \.php$ {
        try_files $uri =404;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_pass php:9000;
        fastcgi_index index.php;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_param PATH_INFO $fastcgi_path_info;
      }
    }
```

Save and exit the editor, then run the kubectl apply command to create the ConfigMap:

```bash
kubectl apply -f nginx_configMap.yaml
```

You should then see the following output:

```
configmap/nginx-config created
```

You have successfully created your Nginx ConfigMap. Now you can create your Nginx Deployment.

Creating Nginx Deployment

To begin, create a new file named nginx_deployment.yaml in the editor:

```bash
nano nginx_deployment.yaml
```

Name this Deployment nginx and add the tier: backend label to it:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    tier: backend
```

Then specify the replica count with the replicas field in the Deployment spec, and add the app: nginx and tier: backend labels to its selector:

```yaml
...
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      tier: backend
```

Similarly, add the pod template. Make sure you use the same labels that you added to the Deployment's selector.matchLabels:

```yaml
...
  template:
    metadata:
      labels:
        app: nginx
        tier: backend
```

Give Nginx access to the code PVC that you created earlier by adding the following parameters under spec.template.spec.volumes:

```yaml
...
    spec:
      volumes:
      - name: code
        persistentVolumeClaim:
          claimName: code
```

Next, add a volume named config that exposes the nginx-config ConfigMap. The items field maps the config key to a file named site.conf:

```yaml
...
      - name: config
        configMap:
          name: nginx-config
          items:
          - key: config
            path: site.conf
```
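The items mapping decides which files appear in the mounted directory. The hypothetical helper below sketches the rule for a configMap volume: with items, only the listed keys are projected, each under its path; without items, every key becomes a file named after the key.

```python
from __future__ import annotations

import posixpath


def configmap_volume_files(data: dict[str, str], items: list[dict] | None,
                           mount_path: str) -> dict[str, str]:
    """Map ConfigMap keys to the files a configMap volume exposes inside the container."""
    if items is None:
        # No items: each key becomes a file with the same name.
        items = [{"key": key, "path": key} for key in data]
    return {posixpath.join(mount_path, item["path"]): data[item["key"]] for item in items}
```

With the items shown above and a mount path of /etc/nginx/conf.d, the only file produced is /etc/nginx/conf.d/site.conf; without items it would be named config, which Nginx's `*.conf` include pattern would not pick up.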

Now, specify the name, image, and port for the container in your pod. This tutorial uses the nginx:1.7.9 image and port 80. Add them under the spec.template.spec section:

```yaml
...
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

Also mount the code volume at /code. Nginx passes the script path it builds from root /code (for example /code/index.php) to PHP-FPM as SCRIPT_FILENAME, so both containers must see the files at the same path:

```yaml
...
        volumeMounts:
        - name: code
          mountPath: /code
```

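The default nginx.conf in the official Nginx image includes every file matching /etc/nginx/conf.d/*.conf. If you mount the config volume on this directory, the ConfigMap's config key appears as /etc/nginx/conf.d/site.conf and is loaded automatically. Note that the mount replaces the directory's original contents, including the image's default.conf. Add the following under the volumeMounts section:

```yaml
...
        - name: config
          mountPath: /etc/nginx/conf.d
```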
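The include rule and the effect of mounting over the directory can be sketched as follows. This hypothetical helper assumes the official image's nginx.conf contains `include /etc/nginx/conf.d/*.conf;` and that the image ships a default.conf in that directory; the result is sorted only to keep it deterministic.

```python
from __future__ import annotations

import fnmatch


def effective_conf_d(image_files: list[str], volume_files: list[str] | None) -> list[str]:
    """Files Nginx would include from /etc/nginx/conf.d via the *.conf pattern.

    When a volume is mounted on the directory, it hides whatever the image shipped there.
    """
    visible = volume_files if volume_files is not None else image_files
    return sorted(name for name in visible if fnmatch.fnmatchcase(name, "*.conf"))
```

With the config volume mounted, `effective_conf_d(["default.conf"], ["site.conf"])` yields only site.conf, so the image's default server no longer competes with your configuration.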

After completing all the above steps, your nginx_deployment.yaml file should look like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    tier: backend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      tier: backend
  template:
    metadata:
      labels:
        app: nginx
        tier: backend
    spec:
      volumes:
      - name: code
        persistentVolumeClaim:
          claimName: code
      - name: config
        configMap:
          name: nginx-config
          items:
          - key: config
            path: site.conf
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
        volumeMounts:
        - name: code
          mountPath: /code
        - name: config
          mountPath: /etc/nginx/conf.d
```

Save and exit the file, then create the Nginx Deployment by running the following command:

```bash
kubectl apply -f nginx_deployment.yaml
```

On successful execution of the command, you should see the following output:

```
deployment.apps/nginx created
```

You can list all your Deployments by executing the below commands:

|

1 |

$ kubectl get deployments |

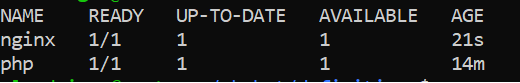

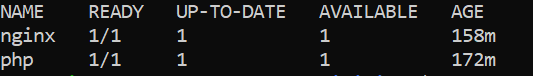

You should now see both the Nginx and PHP-FPM Deployments:

Further, you can execute the following command to list the pods that are managed by both the Deployments listed above:

|

1 |

$ kubectl get pods |

You will see that both your pods are up and running like following:

Since all your Kubernetes objects are active at this point, you can now access the Nginx service on your browser.

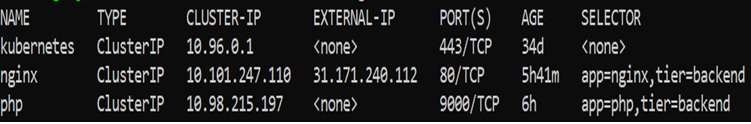

Run the following command to list the services:

|

1 |

$ kubectl get services -o wide |

Note down the External IP of your Nginx service:

Now, using this External IP of Nginx service, you can visit your server by typing http://your_public_ip on your browser. You should be able to see the output of php_info() that confirms that your Kubernetes services are up and running.

Conclusion

In this tutorial, you containerized the PHP-FPM and Nginx services so that you can manage them independently. This not only improves the scalability of your project but also helps you use your resources efficiently. You also learned how to create local storage and a Persistent Volume to store your application code on a volume, which makes it easy to update your services in the future. Together, these steps improve the usability and maintainability of your code.

Furthermore, take a look at our other tutorials focusing on Docker and Kubernetes that you can find on our blog:

- Getting to Know Kubernetes

- How To Create a Kubernetes Cluster Using Kubeadm on Ubuntu 18.04

- Clean Up Docker Resources – Images, Containers, and Volumes

- Deploying Laravel, Nginx, and MySQL with Docker Compose

- How to install & operate Docker on Ubuntu in the public cloud

Happy Computing!
